3 features outside of test execution that teams should automate

Test automation is about more than just executing tests. By integrating these three types of tools into a test automation workflow, organizations can expect to see their productivity increase substantially, while also improving the reliability and validity of their products and test projects.

While all test tools are expected to automate test execution itself, execution covers only one part of the test workflow. In this article, we’ll cover three other aspects of testing that can and should be automated:

- Test case creation and requirement implementation

- Reporting services, data analytics and data export

- Scheduling tests

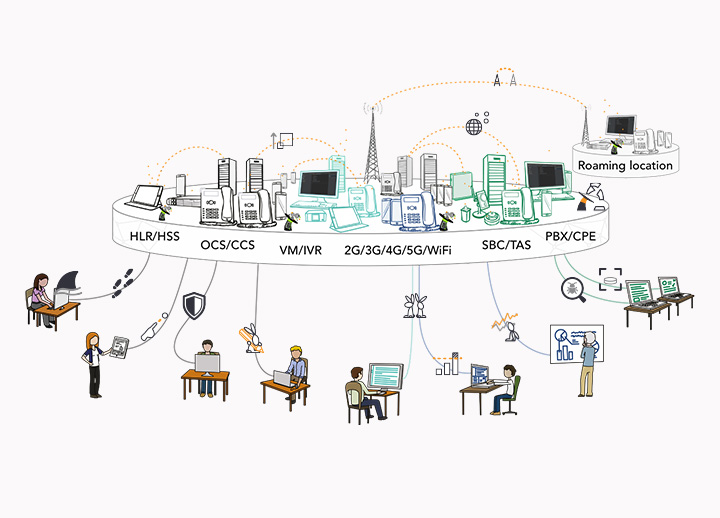

As automation scales up and takes on additional functionality, it leads to greater productivity and output while reducing resource costs. For example, automated scheduling can reduce the number of test automation licenses needed by assigning certain tests to Agents or Bots under one license, which can execute as many test cases as multiple test engineers. Likewise, automating test case creation helps teams start testing sooner and reduces the time spent implementing changes or fixes across entire projects.

What these three features have in common is that they free up time spent on manual tasks, enabling teams to spend more time improving their workflows and fixing defects. Each feature also improves the reliability and validity of tests and their results, both by preventing human error and by generating additional data that helps identify what works as expected and what doesn’t.

Feature 1

Model-based test case creation and management

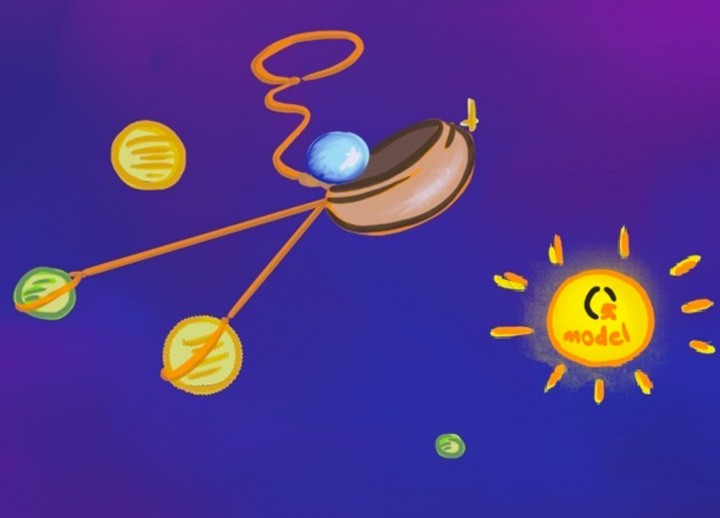

Creating a suite of test cases for a project is time-consuming, but absolutely necessary for ensuring proper test coverage. Test case development may include manually transforming requirements into steps or models, which makes test cases prone to human error such as typos and misinterpreted requirements. One solution to this problem is to use model-based test tools, which can also help scale up test coverage.

Model-based testing (MBT) derives suites of test cases from models and test requirements. These models often look like flow charts or decision tables, and include components such as:

- Variables and variable types

- Parameters

- Actions or “decisions”

- Inputs and outputs

Once the model is defined, the tool generates a set of test cases from it. These can be imported into a test automation application and executed, or may be automatically deployed to an intelligent scheduler.
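To make the idea concrete, here is a minimal Python sketch of deriving test cases from a model. It assumes a deliberately simple model: a few parameters with finite value sets, one restriction, and one decision rule that yields the expected output. The parameter names, the restriction and the rule are hypothetical and not taken from any particular MBT tool. The sketch enumerates every allowed combination (exhaustive generation), whereas real tools may use other strategies such as pairwise selection.

```python
from itertools import product

# Hypothetical model: each parameter has a finite set of possible values
MODEL = {
    "network": ["4G", "5G", "WiFi"],
    "call_type": ["voice", "video"],
    "roaming": [False, True],
}


def is_allowed(case):
    """Hypothetical restriction: video calls over 4G are excluded from this model."""
    return not (case["network"] == "4G" and case["call_type"] == "video")


def expected_result(case):
    """Hypothetical decision rule mapping inputs to the expected output."""
    return "roaming_tariff" if case["roaming"] else "home_tariff"


def derive_test_cases(model, allowed, decide):
    """Enumerate every combination of parameter values, drop disallowed ones,
    and attach the expected result to each remaining test case."""
    names = list(model)
    cases = []
    for values in product(*(model[name] for name in names)):
        case = dict(zip(names, values))
        if allowed(case):
            case["expected"] = decide(case)
            cases.append(case)
    return cases


cases = derive_test_cases(MODEL, is_allowed, expected_result)
print(len(cases))  # 10
for case in cases[:3]:
    print(case)
```

With 3 × 2 × 2 = 12 raw combinations and the two 4G video combinations removed by the restriction, the sketch derives 10 test cases, each carrying its expected result.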

A major benefit of MBT is that models can be tweaked or updated and the changes are automatically propagated throughout the entire test project, which reduces the maintenance work and corrections a test project needs. At the same time, some MBT tools require advanced programming skills or familiarity with fields of mathematics such as combinatorics. Therefore, when considering whether to use MBT, it is useful to ask questions such as:

- What type of programming and mathematical knowledge, if any, is required?

- How does the output from the model look?

- Does the MBT tool integrate with testing frameworks, and if so, which ones?

- How do you convert requirements into test cases with MBT?
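The propagation benefit described above can be illustrated with another small, hypothetical sketch: the test suite is always regenerated from the model, so a single change to the model (here, adding a network type) updates the whole suite, and a set difference shows exactly which test cases were added or removed. This is an assumption about how such a workflow could look, not a description of how any specific MBT tool implements it.

```python
from itertools import product


def derive(model):
    """Derive every combination of parameter values; each test case is a hashable tuple."""
    names = sorted(model)
    return {
        tuple(zip(names, values))
        for values in product(*(model[name] for name in names))
    }


# Hypothetical model before and after a requirement change (Wi-Fi calling added)
old_model = {"network": ["4G", "5G"], "call_type": ["voice", "video"]}
new_model = {"network": ["4G", "5G", "WiFi"], "call_type": ["voice", "video"]}

old_cases = derive(old_model)
new_cases = derive(new_model)

added = new_cases - old_cases    # test cases that must now be run
removed = old_cases - new_cases  # test cases that no longer apply

print(len(old_cases), len(new_cases), len(added), len(removed))  # 4 6 2 0
for case in sorted(added):
    print(dict(case))
```

Because the suite is derived rather than edited by hand, the only maintenance step is changing the model; the diff then tells the team what to review.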

Automated test case creation can be very useful, especially when large sets of test cases need to be adjusted or adapted to other projects. However, it might not be the best choice for a test team’s first project. For example, writing test cases manually is highly recommended for getting familiar with newly adopted test software, as well as for exploratory testing.

Feature 2

Reporting

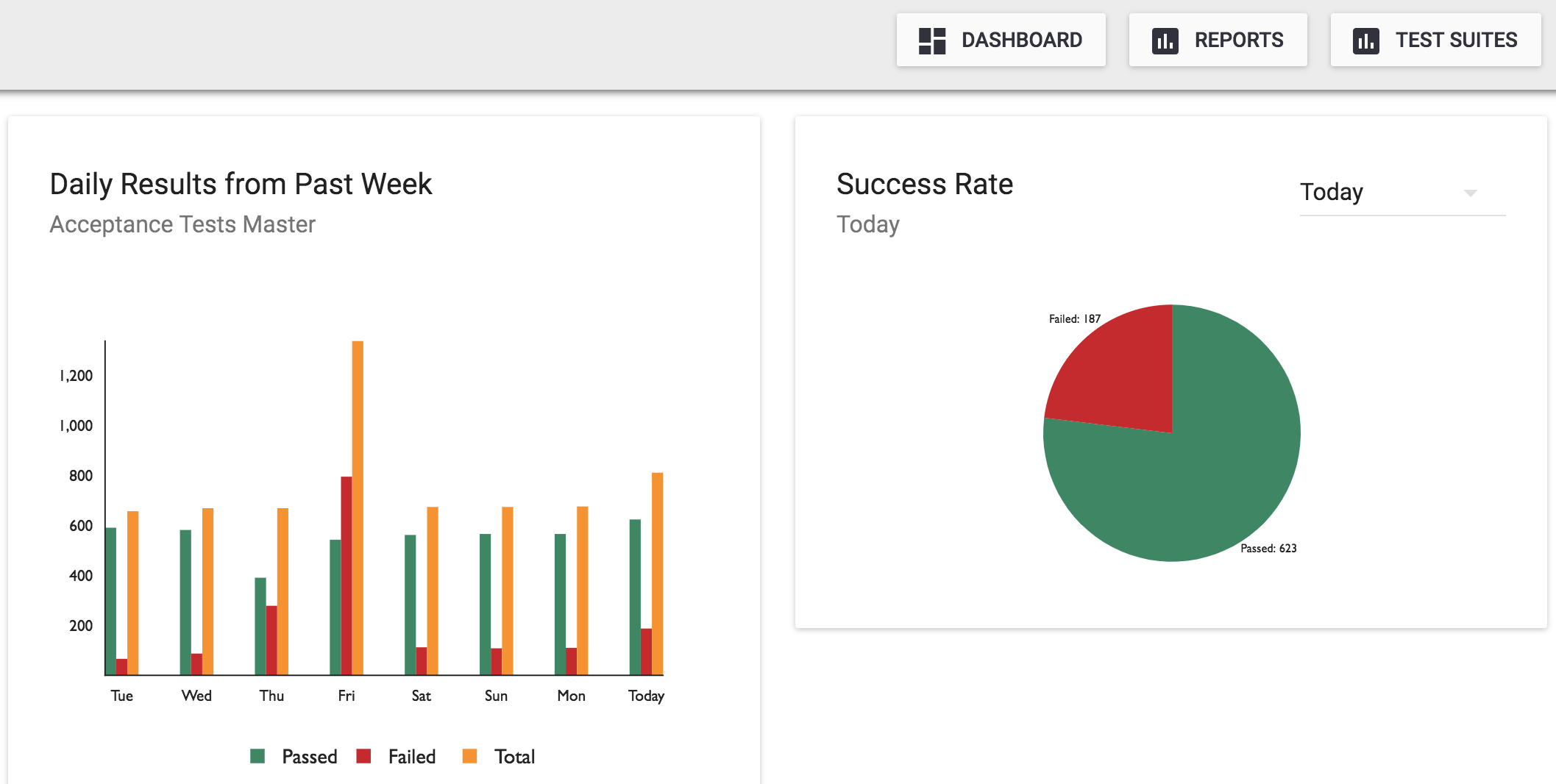

Test output takes various forms, such as logs and reports, and varies from one automation tool to the next. Some software includes automated reporting, either as part of the product or as an extension. Other test tools provide only limited data about the results, such as decentralized logs that users must parse themselves.

Automating the collection of test results and the creation of reports eliminates manual activities such as updating spreadsheets and gathering test results from different users or machines. Reporting services may include the following features:

- Charts and graphs

- Approval workflows

- Automated defect and issue tracking

- Error categorization

- Integration with ticketing systems and external databases

By using such reporting services, testers and project managers can save several hours of administrative work per week. In addition, automated reporting prevents data entry errors and streamlines how test results are collected and interpreted.
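As an illustration of the manual work automated reporting can replace, the following Python sketch merges results reported by several machines, counts passes and failures, and groups failures into categories using regular expressions. The result records, machine names and categorization rules are hypothetical; a real reporting service would read results from its own storage or log format.

```python
import re
from collections import Counter

# Hypothetical raw results as collected from several machines or users
RAW_RESULTS = [
    {"machine": "agent-1", "test": "call_setup", "status": "passed", "message": ""},
    {"machine": "agent-2", "test": "sms_delivery", "status": "failed",
     "message": "Timeout after 30s waiting for SMS"},
    {"machine": "agent-1", "test": "data_session", "status": "failed",
     "message": "Device not found: phone-3"},
    {"machine": "agent-3", "test": "call_release", "status": "failed",
     "message": "Timeout after 10s waiting for hangup"},
]

# Hypothetical categorization rules: the first matching pattern wins
CATEGORY_RULES = [
    ("timeout", re.compile(r"\btimeout\b", re.IGNORECASE)),
    ("device_unavailable", re.compile(r"device not found", re.IGNORECASE)),
]


def categorize(message):
    """Return the category of the first rule whose pattern matches the message."""
    for category, pattern in CATEGORY_RULES:
        if pattern.search(message):
            return category
    return "uncategorized"


def summarize(results):
    """Count results per status and failures per error category."""
    status_counts = Counter(r["status"] for r in results)
    failure_categories = Counter(
        categorize(r["message"]) for r in results if r["status"] == "failed"
    )
    return {"status": dict(status_counts), "failure_categories": dict(failure_categories)}


summary = summarize(RAW_RESULTS)
print(summary)
```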

When looking for test tools that include automated reporting, it’s helpful to ask:

- Who can access the reporting service?

- Does it require programming knowledge or support from IT/Infrastructure?

- Can data be synchronized with other tools such as HP ALM or JIRA?

- Where is the data hosted?

- What types of categorization are available?

Customers that already have robust data analysis tools and workflows may prefer to use their own tooling. However, test automation software that comes with its own reporting and analytics tools provides a more convenient approach to tasks such as approval workflows, bug and issue tracking, and correctly parsing categories and values for further analysis. Additionally, when test data must be shared between multiple parties that use different project management tools, such as a supplier and its customer, reporting services that automatically synchronize with external tools such as JIRA or HP ALM can save a lot of time and resources that would otherwise be spent manually keeping the data consistent and correctly formatted in every tool.
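Synchronizing with an external tracker usually means mapping test failures onto the tracker’s issue format. The sketch below builds request bodies in the shape used by Jira’s REST API for creating issues (a `fields` object containing `project`, `summary`, `description` and `issuetype`) and creates only one issue per test and error category. It does not send anything. The project key `QA`, the `Bug` issue type and the deduplication rule are assumptions, and the fields a Jira project actually requires depend on its configuration.

```python
import json


def to_jira_issue(result, project_key="QA"):
    """Map one failed test result to a Jira create-issue request body (not sent here)."""
    return {
        "fields": {
            "project": {"key": project_key},
            "summary": f"[{result['test']}] {result['category']}",
            "description": f"Failed on {result['machine']}: {result['message']}",
            "issuetype": {"name": "Bug"},
        }
    }


def issues_for_failures(failures, project_key="QA"):
    """Build one issue per (test, category) pair so repeated failures are not filed twice."""
    seen = set()
    issues = []
    for result in failures:
        key = (result["test"], result["category"])
        if key not in seen:
            seen.add(key)
            issues.append(to_jira_issue(result, project_key))
    return issues


# Hypothetical categorized failures, e.g. produced by a reporting step
failures = [
    {"test": "sms_delivery", "category": "timeout", "machine": "agent-2",
     "message": "Timeout after 30s"},
    {"test": "sms_delivery", "category": "timeout", "machine": "agent-4",
     "message": "Timeout after 30s"},
    {"test": "data_session", "category": "device_unavailable", "machine": "agent-1",
     "message": "Device not found: phone-3"},
]

issues = issues_for_failures(failures)
print(json.dumps(issues, indent=2))
```

The two `sms_delivery` timeouts collapse into a single issue, which is the kind of bookkeeping that otherwise has to be done by hand when several parties share results.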

Feature 3

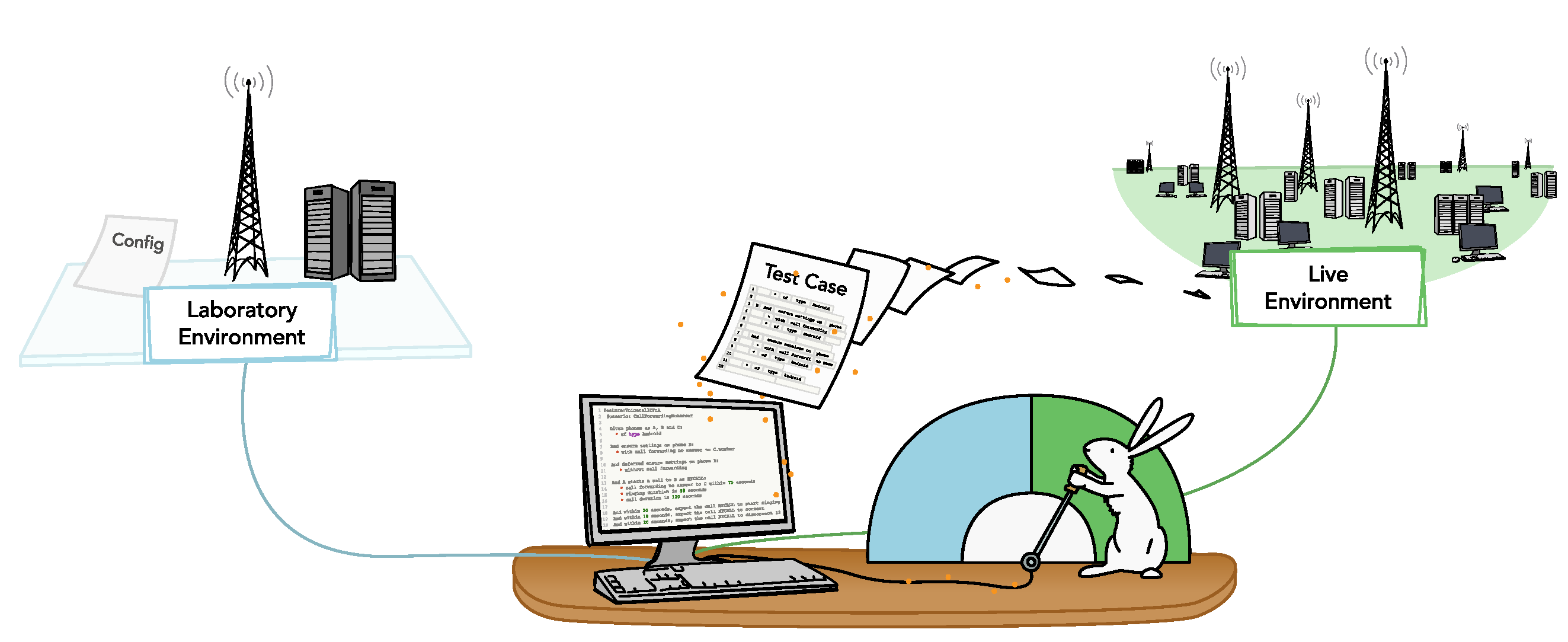

Scheduling tests

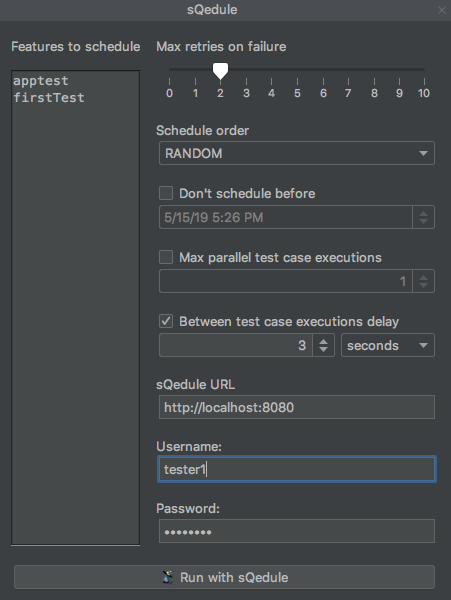

In addition to test case creation and reporting, automated test scheduling helps eliminate wasted time by assigning test cases to Agents or Bots that execute them. Automated or “intelligent” scheduling is especially useful for tests that are very long or must be run outside of working hours. It is also a highly productive approach to running regression tests or tests that must be re-run multiple times.
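A scheduler’s decision to run long tests outside of working hours can be reduced to a simple time-window check. The sketch below assumes hypothetical working hours of Monday to Friday, 08:00 to 18:00, and a hypothetical `off_hours_only` flag on each test; it is not the configuration format of sQedule or any other tool.

```python
from datetime import datetime, time

# Hypothetical working hours: Monday to Friday, 08:00 to 18:00 local time
WORK_START = time(8, 0)
WORK_END = time(18, 0)


def outside_working_hours(moment: datetime) -> bool:
    """True on weekends and outside the working-hours window on weekdays."""
    if moment.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return True
    return not (WORK_START <= moment.time() < WORK_END)


def runnable_now(test: dict, moment: datetime) -> bool:
    """Tests flagged as off-hours-only start only outside working hours."""
    if test.get("off_hours_only"):
        return outside_working_hours(moment)
    return True


tests = [
    {"name": "full_regression", "off_hours_only": True},
    {"name": "smoke", "off_hours_only": False},
]
now = datetime(2024, 3, 6, 14, 30)  # a Wednesday afternoon
selected = [t["name"] for t in tests if runnable_now(t, now)]
print(selected)  # ['smoke']
```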

Allocating certain types of test cases or projects to an intelligent scheduler can help organizations reduce the number of test automation licenses they need, since intelligent scheduling can execute test cases at a much larger scale than human testers. Therefore, combining test engineers for complex test cases that require human supervision with Agents for the remaining tests is a great way to achieve a high return on investment in test automation.

Automated scheduling tools, such as QiTASC’s sQedule, may provide features including:

- Different types of test ordering, such as random or sequential

- Parallel execution by multiple Agents

- Automated retries for failed test cases

- Delays between test cases, for example to allow for “cool-down periods”

- Resource-aware scheduling, which prioritizes test cases for which all necessary devices are available
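Several of the features listed above can be combined in a small scheduling loop. The following Python sketch is an illustration built on assumptions, not how sQedule works: it supports sequential or seeded random ordering, runs only the test cases whose devices are all available (marking the rest as blocked rather than failed), retries failed test cases, and pauses between test cases. The `ScheduledTest` type, the device names and the `run` callback are hypothetical, and parallel execution by multiple Agents is left out for brevity.

```python
import random
import time
from dataclasses import dataclass


@dataclass
class ScheduledTest:
    name: str
    devices: set  # devices the test needs, e.g. {"phone-1"}


def schedule(tests, available_devices, run, order="sequential",
             max_retries=1, delay_s=0.0, seed=None):
    """Run test cases one after another and return {test name: final status}."""
    queue = list(tests)
    if order == "random":
        # Seeded shuffle so that a random order can be reproduced later
        random.Random(seed).shuffle(queue)

    results = {}
    for test in queue:
        # Resource-aware: do not run tests whose devices are not all available
        if not test.devices <= available_devices:
            results[test.name] = "blocked"
            continue
        status = "failed"
        # One initial attempt plus up to max_retries retries
        for _ in range(1 + max_retries):
            if run(test):
                status = "passed"
                break
        results[test.name] = status
        if delay_s > 0:
            time.sleep(delay_s)  # cool-down period before the next test case
    return results


attempts = {}


def fake_run(test):
    """Hypothetical executor: 'flaky_sms' fails once, 'broken_call' always fails."""
    attempts[test.name] = attempts.get(test.name, 0) + 1
    if test.name == "flaky_sms":
        return attempts[test.name] > 1
    return test.name != "broken_call"


tests = [
    ScheduledTest("call_setup", {"phone-1", "phone-2"}),
    ScheduledTest("flaky_sms", {"phone-1"}),
    ScheduledTest("broken_call", {"phone-2"}),
    ScheduledTest("roaming_data", {"phone-3"}),
]
results = schedule(tests, {"phone-1", "phone-2"}, fake_run, max_retries=1)
print(results)
```

Marking `roaming_data` as blocked instead of failed keeps a missing device from being reported as a product defect, and the retry turns the one-off `flaky_sms` failure into a pass while `broken_call` still fails after two attempts.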

On top of improving productivity, automated scheduling can help ensure better test data through configurations that only allow certain tests to run, for example, those for which all resources (such as phones and internet connections) are available. Likewise, schedulers can be configured to execute only tests that are tagged as suitable for execution, while excluding unsuitable ones (such as tests with known defects).
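Tag-based selection of this kind can be sketched as a simple filter. The tag names `ready` and `known-defect` are hypothetical conventions chosen for this example; a real scheduler would define its own tagging scheme.

```python
# Hypothetical tag conventions: "ready" marks a test as suitable for unattended runs,
# "known-defect" marks a test that is expected to fail until a fix lands.
INCLUDE_TAGS = {"ready"}
EXCLUDE_TAGS = {"known-defect"}


def select_for_execution(tests, include=INCLUDE_TAGS, exclude=EXCLUDE_TAGS):
    """Keep tests that carry every include tag and none of the exclude tags."""
    return [
        t for t in tests
        if include <= t["tags"] and not (exclude & t["tags"])
    ]


tests = [
    {"name": "call_setup", "tags": {"ready", "regression"}},
    {"name": "sms_delivery", "tags": {"ready", "known-defect"}},
    {"name": "new_feature_x", "tags": {"draft"}},
]
selected = [t["name"] for t in select_for_execution(tests)]
print(selected)  # ['call_setup']
```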

When evaluating test tools, the following questions will help you understand to what degree they can automate test scheduling:

- Does it support time constraints, such as only running a test case on weekends or at certain times of day?

- Can it be used remotely?

- Is it available as a user interface and/or via the command line?

- What devices and configuration switches does it recognize?

- How does it handle failed test cases?

- What is a good combination of test engineers vs. scheduling Agents to use in my project?

Conclusion

In this article, we covered three testing features that can be automated in addition to the execution itself: test case creation and management, reporting and scheduling.

Model-based testing (MBT) tools like disQover* help scale up test creation and management. They do this by creating models of Flows, from which test suites are derived. These test suites contain scenarios representing all possible combinations of the parameters and restrictions defined by the user, and are quick and easy to adapt as a project changes.

Reporting tools, such as conQlude, streamline test case reporting, defect management and approval workflows by recognizing and organizing test data. Such reporting interfaces are often compatible with external project management tools such as JIRA and HP ALM, which is especially useful for ongoing projects with multiple sprints.

Finally, intelligent schedulers, such as sQedule, prioritize test cases while taking associated devices and timing issues into account, so that the optimal combination of test cases is always executed. These tools also reduce workloads and even license needs by handing over certain tests to Agents, giving testers more time to focus on complex test cases, troubleshooting and other important activities.

By integrating these types of tools into a test automation workflow, organizations can expect to see their productivity increase substantially, while also improving the reliability and validity of their products and test projects.

* The product disQover is currently not available.