3 key questions that will match the right test automation tool to your team

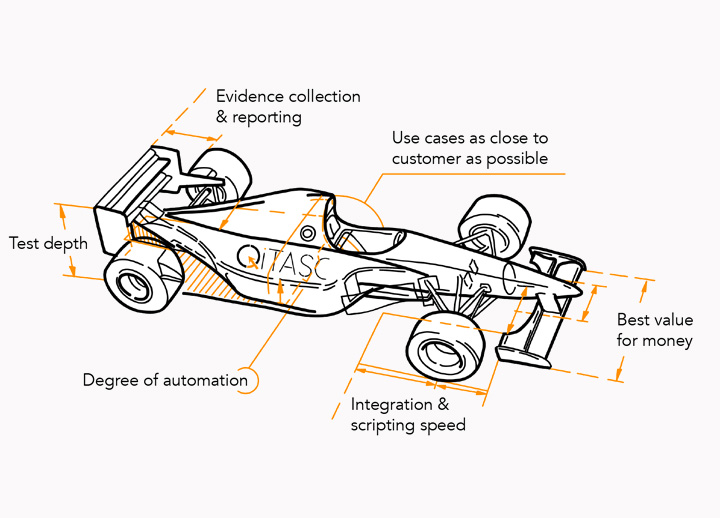

Picking the right test automation solution requires evaluating both the software itself and the people who will be using it. This guide focuses on three key questions that will help you identify the best test tool for your team. Before you compare specific testing options, ask the following questions to decide which tools you might use and why:

Question 1: What Are the Different Language Layers?

Question 2: What Are the Different Ways to Interpret Test Results?

Question 3: What Is Automated and What Isn’t?

By asking these questions and framing them in terms of your team members, their roles and their technical skills, you’ll have a better idea of what to look for (and what not to look for) in a testing solution. This is essential for achieving project goals, developing an efficient testing workflow, and keeping everybody motivated.

Who will be using the software and what is their technical knowledge?

Different test automation tools require varying levels of technical expertise, programming knowledge and IT integration. Furthermore, team members without a technical background will likely also need some familiarity with the software you choose to test with.

While open-source options such as Selenium and Appium are readily available, they usually require programming skills and familiarity with languages such as C#, Python or Java to create and maintain test cases. Commercial test automation solutions, on the other hand, often include user interfaces and built-in functionality that help non-experts create, interpret and execute test cases, while providing additional language layers for advanced users to write custom functions and models.

Evaluate your team’s skills and needs

Since testers won’t be the only people interacting with a test automation tool, team members may differ significantly in how well they understand what goes on in a project. Thus, when thinking about who will need test automation know-how, it’s helpful to evaluate:

Who are the different users and what are their roles?

- For example, test engineers, project managers and marketing

What technical skills does each user type have, and what technical skills do they lack?

- For example, junior vs. senior testers with different levels of programming knowledge

- IT and DevOps, who integrate the test software with the System Under Test (SUT)

- Marketing personnel, who need to interpret results and translate them into meaningful information for diverse audiences

What types of features are of greatest importance to each user type? What will they use these features for?

- For example, can tests be written by junior testers without coding knowledge?

- Are logs or reports centrally accessible, and do they display a “bird’s eye” view for project management and marketing?

Develop criteria and questions from your evaluation

After determining the different potential user types, you can translate their skills and needs into criteria or questions for judging different test automation tools. The questions about your team members above already hint at what these criteria could look like.
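One way to turn such criteria into a comparison is a simple weighted decision matrix: weight each criterion by how much it matters to your team, rate each candidate tool against it, and compare the weighted totals. The Python sketch below only illustrates the arithmetic; the criteria, weights, tool names and ratings are all hypothetical placeholders for your own evaluation.

```python
# A minimal weighted decision matrix. All criteria, weights, tools and
# ratings below are hypothetical placeholders, not real product scores.

# How important each criterion is to the team (weights sum to 1.0).
WEIGHTS = {
    "no_code_test_authoring": 0.4,  # junior testers without coding skills
    "custom_functions": 0.3,        # senior testers who write low-level code
    "central_reporting": 0.3,       # project management and marketing
}

# How well each candidate tool meets each criterion, rated 0 to 5.
RATINGS = {
    "Tool A": {"no_code_test_authoring": 1, "custom_functions": 5, "central_reporting": 2},
    "Tool B": {"no_code_test_authoring": 4, "custom_functions": 3, "central_reporting": 4},
}


def weighted_score(ratings: dict[str, int], weights: dict[str, float]) -> float:
    """Sum of rating * weight over all criteria."""
    return sum(ratings[criterion] * weight for criterion, weight in weights.items())


def rank_tools(all_ratings, weights):
    """Return (tool, score) pairs, best first."""
    scores = {tool: weighted_score(r, weights) for tool, r in all_ratings.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


for tool, score in rank_tools(RATINGS, WEIGHTS):
    print(f"{tool}: {score:.1f}")
```

With these made-up numbers, Tool B ranks first (3.7 vs. 2.5) because it rates well on the criterion this hypothetical team weights most heavily. Shifting most of the weight to custom functions, as a team of senior developers might, would reverse the order.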

Question 1

What are the different language layers?

Language layers refer to different levels of abstraction. Layers with a high level of abstraction give a general, comprehensive overview of the testing activities and often include templates or predefined features and steps. Such languages, like Gherkin, are often natural-sounding and easy for non-experts to read and understand.

On the other hand, a language layer with a low level of abstraction breaks general components down into smaller pieces and involves more complexity in linking those pieces together. Working at this layer might involve defining functions and models or integrating external programming languages, and it requires at least intermediate programming skills.
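The link between the two layers can be sketched in a few lines of Python. This is not how intaQt® works internally; it is a generic illustration, loosely modeled on how Gherkin-style tools bind steps, in which each readable step sentence is matched by a pattern to a lower-level function that does the actual work. The step texts, the `step` decorator and the `browser_actions` list are hypothetical.

```python
import re
from typing import Callable

# Registry mapping step patterns (high-level layer) to functions (low-level layer).
STEP_REGISTRY: list[tuple[re.Pattern, Callable[..., None]]] = []

# Stand-in for real browser automation: records what each step would do.
browser_actions: list[str] = []


def step(pattern: str):
    """Decorator that registers a function as the implementation of a step."""
    def register(func):
        STEP_REGISTRY.append((re.compile(pattern), func))
        return func
    return register


@step(r"open Webpage and click element (\d+) times")
def open_and_click(times: str) -> None:
    browser_actions.append("open page")
    for _ in range(int(times)):  # captured groups arrive as strings
        browser_actions.append("click element")


@step(r"wait for (\d+) seconds")
def wait(seconds: str) -> None:
    # A real implementation would sleep or poll; here we only record it.
    browser_actions.append(f"wait {seconds}s")


def run_step(text: str) -> None:
    """Find the first pattern that fully matches the step text and call it."""
    for pattern, func in STEP_REGISTRY:
        match = pattern.fullmatch(text)
        if match:
            func(*match.groups())
            return
    raise LookupError(f"No step definition matches: {text!r}")


# The high-level layer: plain sentences a non-programmer can read and write.
for line in ["open Webpage and click element 2 times", "wait for 5 seconds"]:
    run_step(line)

print(browser_actions)
```

Running it prints `['open page', 'click element', 'click element', 'wait 5s']`. Non-programmers only work with the sentences in the final loop, while the decorated functions form the low-level layer that someone with coding skills maintains.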

Example: UI steps feature file

High-level steps make it easy to describe and understand the features and functionality being tested. The intaQt® Feature File below shows a test that:

- Opens a browser

- Opens a webpage

- Clicks on an element two times

- Waits for five seconds

Becoming proficient at writing and executing such tests requires minimal training, while explaining what the test does to other stakeholders is fairly straightforward:

```gherkin
Feature: WebtestFeature

  Scenario: WebtestScenario
    Given a firefox browser as mySession
    And on mySession, open Webpage and click element 2 times
    And wait for 5 seconds
```

Example: UI steps functions

In a test tool that also includes a low level of abstraction, the Feature File above would be complemented by custom functions that define how each step will actually behave; for example, the webpage elements and the actions performed on them.

Creating these functions would be the responsibility of intermediate or senior testers who have coding experience and can better abstract the interactions that they need to define between each step. Even so, the language reads well for novices, which supports fast learning of web and app automation:

```
stepdef "open Webpage and click element {} times" / xTime /
    println(xTime)
    myView := open myExampleView
    WebtestExampleModel.clickOstrich(xTime)
    delay(2)
    Page.close()
end

model WebtestExampleModel
    func clickOstrich(countClicks)
        countClicks := countClicks + 0 //int to BigDecimal
        myView := await myExampleView
        x := 0
        for x in range(0, countClicks)
            myView.doClick()
        end
    end
end

view myExampleView
    url "https://instantostrich.com/"
    elem videoField := "//*[@id='video']"
    action (videoField) doClick := click
end
```

Does my team’s expertise match the language layers?

After evaluating the people who will be involved in your test project, as well as their skills, you should have a better idea of which levels of abstraction or language layers are the most practical. For example, if you have a mix of junior and senior testers, you’ll want a tool that allows for both high and low levels of abstraction.

It’s also important to look at what documentation, training options and customer support come with each test tool. For example, having access to tutorials and online training might be more important to newer users, while advanced users may prefer technical documentation that demonstrates how to integrate with certain interfaces or protocols.

Question 2

What are the different ways to interpret test results?

Test results and data are often difficult to interpret and troubleshoot, and sometimes even difficult to find. Output can also vary depending on the language layers or levels of abstraction described above. For example, server logs display low-level, detailed information that is helpful for debugging but cumbersome to read through, especially for users with limited technical skills. On the other hand, reporting interfaces that show high-level information such as executed steps, their outcomes and error messages are much more accessible to all user types. In addition, many teams synchronize their test output with external tools such as project management databases.

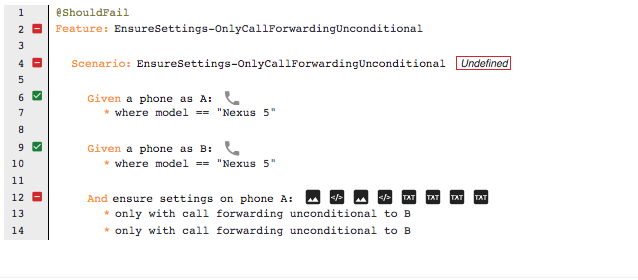

Log output

Log output, like in the example below, does contain an error message about incompatible step details when ensuring call forwarding. However, it might not be obvious how to read through the log or what to look for, because such server logs include every log message, including protocol logs and debug information, in addition to the test’s steps and their outcomes.

Often developers or more advanced testers consult logs to find clues to failures or to check if any obscure issues are present in a test case and the system under test.

While server logs aren’t user-friendly for everyone, a senior tester or IT member will likely want to examine them at some point during a test project. Therefore, it’s important to know:

- What types of logs the test automation software generates

- Whether logs are available in a centralized location or only on the user’s machine

- Who can access the logs

- Whether log data is easily parseable
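To illustrate what “easily parseable” can mean in practice, the Python sketch below filters a mixed server log down to test-step outcomes and errors, skipping protocol and debug lines. The log format (time, level, source, message) and the sample lines are hypothetical; real tools use their own formats, so the pattern would need adapting.

```python
import re

# Hypothetical log format: "<time> <LEVEL> [<source>] <message>"
LOG_LINE = re.compile(
    r"^(?P<time>\S+) (?P<level>[A-Z]+) \[(?P<source>[^\]]+)\] (?P<message>.*)$"
)

SAMPLE_LOG = """\
10:00:01 DEBUG [sip] INVITE sent to 192.0.2.10
10:00:01 INFO [step] Given a firefox browser as mySession: PASSED
10:00:02 DEBUG [http] GET / 200
10:00:03 INFO [step] And wait for 5 seconds: PASSED
10:00:04 ERROR [step] Ensure call forwarding: incompatible step details
"""


def parse(lines):
    """Yield a dict per line that matches the expected format; skip the rest."""
    for line in lines:
        match = LOG_LINE.match(line)
        if match:
            yield match.groupdict()


def step_entries(entries, sources=("step",)):
    """Keep only entries from the given sources (here: test steps)."""
    return [e for e in entries if e["source"] in sources]


steps = step_entries(parse(SAMPLE_LOG.splitlines()))
errors = [e for e in steps if e["level"] == "ERROR"]

for e in steps:
    print(e["time"], e["level"], e["message"])
print(f"{len(errors)} error(s) among {len(steps)} step entries")
```

The protocol (`sip`) and HTTP debug lines are dropped, leaving three step entries, one of which is the call-forwarding error. A tool whose logs follow a consistent, documented format makes this kind of filtering straightforward.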

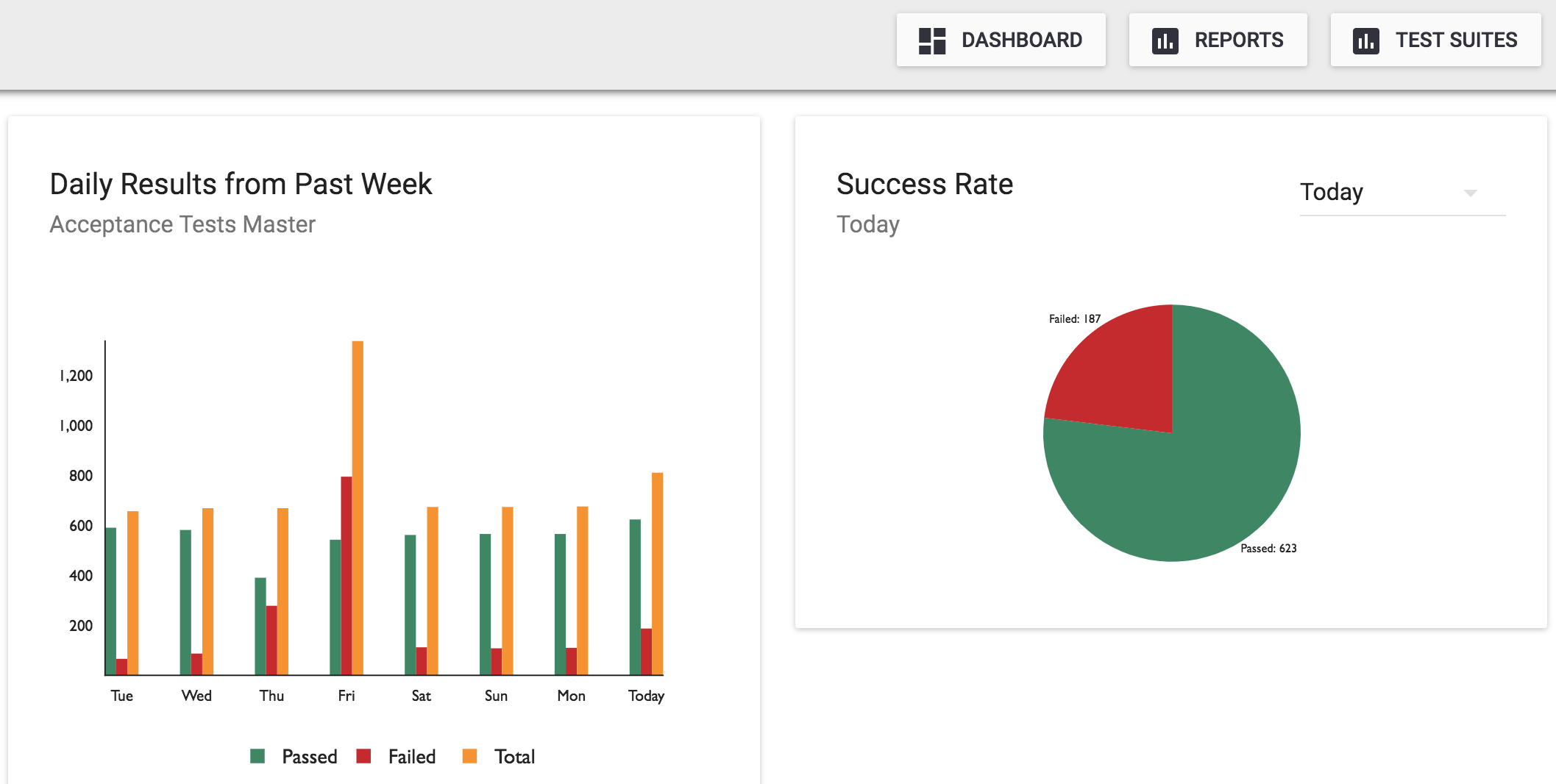

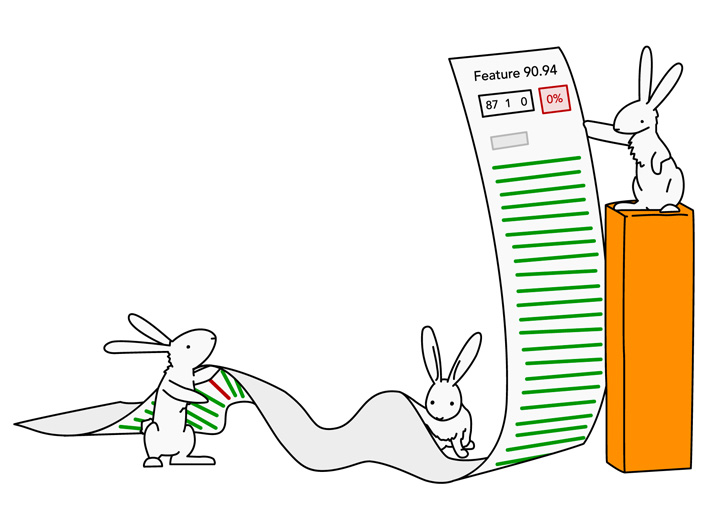

Reporting and summaries

While access to logs is critical for troubleshooting and debugging, a quick overview of results from individual test cases and from the project as a whole makes it much easier to abstract results and immediately understand pass/fail rates, common errors and bug tracking. Because they are user-friendly and abstract away low-level detail, reporting services are extremely useful for communicating results to non-technical audiences who are interested in a project’s outcome. For example, QiTASC’s conQlude reporting service would depict the same log output that we showed above in a report like the one below.

This report draws the user’s attention to the error category and to the step on which the test case failed. It does this by showing the Feature File, which uses a high-level test language, and highlighting important information.

Having the option to further define and group error categories is useful for several reasons:

- They give a general overview of common errors

- Categories that reflect user errors provide insight about training or re-training needs

- Error categories can be further grouped by additional criteria such as severity level, device types or test phase
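The kind of summary a reporting service produces can be approximated with a few lines of Python: count passes and failures, then group failures by error category and severity. The result records, category names and severity levels below are hypothetical examples, not conQlude’s data model.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class TestResult:
    name: str
    passed: bool
    category: Optional[str] = None  # error category, set only for failures
    severity: Optional[str] = None


# Hypothetical results from one test run.
RESULTS = [
    TestResult("call_forwarding_busy", False, "incompatible step details", "major"),
    TestResult("call_forwarding_unconditional", True),
    TestResult("sms_delivery", False, "device unavailable", "minor"),
    TestResult("web_login", True),
    TestResult("call_hold", False, "incompatible step details", "major"),
]

passed = sum(r.passed for r in RESULTS)  # True counts as 1
pass_rate = passed / len(RESULTS)

failures = [r for r in RESULTS if not r.passed]
by_category = Counter(r.category for r in failures)
by_category_and_severity = Counter((r.category, r.severity) for r in failures)

print(f"Pass rate: {pass_rate:.0%} ({passed}/{len(RESULTS)})")
for category, count in by_category.most_common():
    print(f"{category}: {count}")
```

Adding fields such as device type or test phase to each record lets you group by those criteria in the same way.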

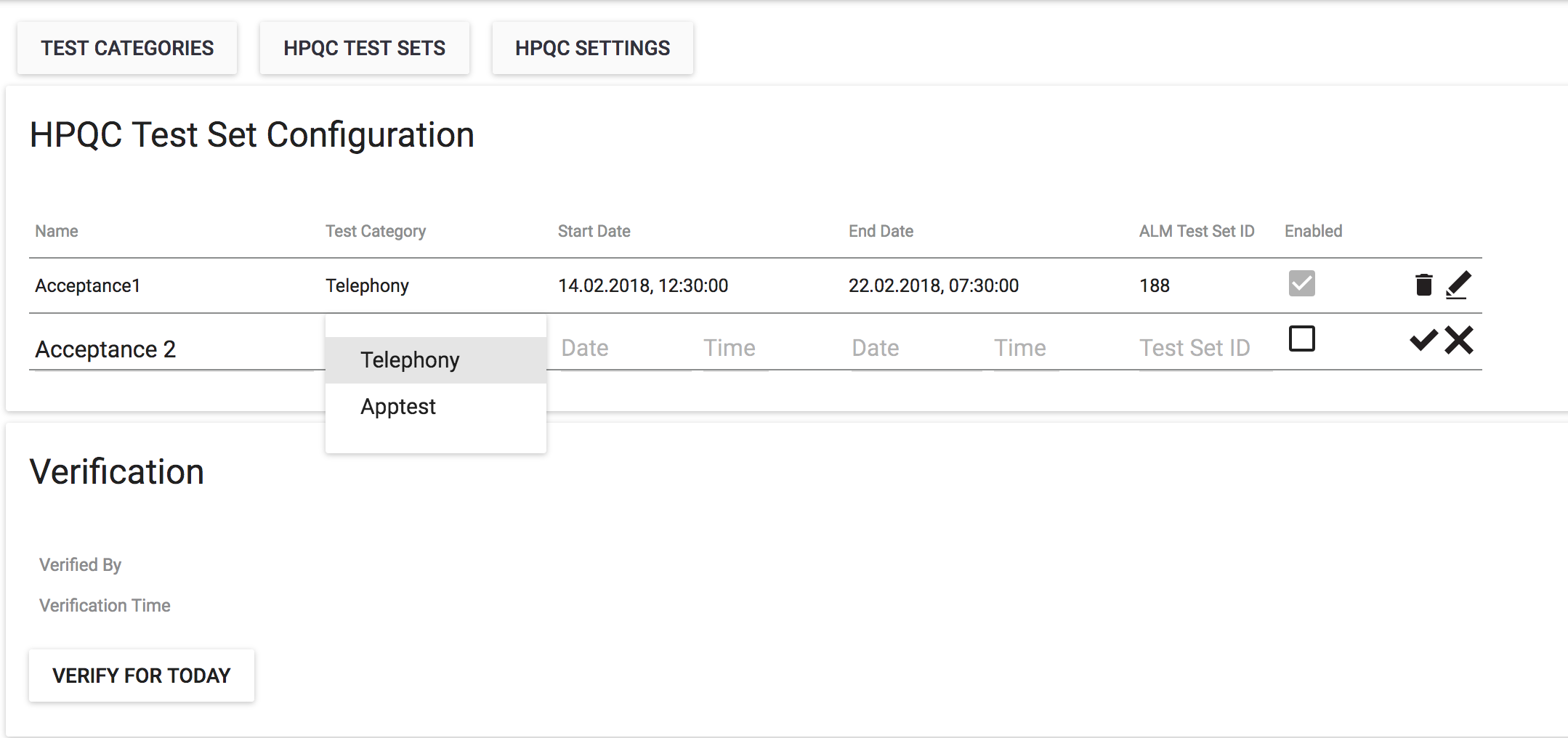

Synchronization with other tools and data export

In addition to the actual test output, teams often need to synchronize results internally or with external customers. For example, a team may use JIRA internally to track its test executions and defects, while the customer uses HP ALM. Certain reporting tools, such as QiTASC’s conQlude, simplify this because they can automatically synchronize with both systems. Being able to download data as CSV files or in other parseable formats may also be important for data analysts and project managers who wish to track certain metrics or KPIs.
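Exporting results in a parseable format needs little code. The sketch below writes test results to CSV with Python’s standard `csv` module so that analysts can load them into a spreadsheet or analysis tool; the column names and rows are hypothetical. Synchronizing with JIRA or HP ALM would go through those products’ own interfaces, which are not shown here.

```python
import csv
import io

# Hypothetical result rows; in practice these would come from the test tool.
results = [
    {"test_case": "call_forwarding_busy", "status": "FAILED",
     "error_category": "incompatible step details", "duration_s": 42},
    {"test_case": "web_login", "status": "PASSED",
     "error_category": "", "duration_s": 7},
]

FIELDS = ["test_case", "status", "error_category", "duration_s"]


def to_csv(rows, fieldnames):
    """Serialize a list of dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


csv_text = to_csv(results, FIELDS)
print(csv_text)

# To save a file instead, open it with newline="" as the csv docs recommend:
# with open("results.csv", "w", newline="", encoding="utf-8") as f:
#     f.write(csv_text)
```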

Is the test output relevant for my team?

Once you’ve looked at the types of test output a test automation tool generates, you can evaluate whether their level of complexity is useful for your team or would take too much additional effort to interpret. For example, if you have an in-house data analysis team, log output without a reporting service might be sufficient. However, for smaller organizations, reporting tools provide clear summaries of a project’s progress that can be quickly and easily shared with non-technical stakeholders, including customers and investors.

Question 3

What is automated and what is not?

The previous two questions described issues surrounding the languages that support test case development and the ways that results are interpreted. However, they only briefly touch on automation itself. While all test tools are expected to automate test execution, execution is only one part of a test workflow. In this section, we’ll cover three other aspects of testing that can and should be automated:

- Test case creation and requirement implementation

- Reporting services, data analytics and data export

- Scheduling tests

As automation scales up and integrates additional functionality, it leads to greater productivity and output while reducing resource costs. For example, automated scheduling can reduce the number of test automation licenses needed by assigning certain tests to Agents or Bots under one license, which can execute as many test cases as multiple test engineers. Likewise, automating test case creation helps teams start testing sooner and reduces the time spent implementing changes or fixes across entire projects.

What these three features have in common is that they free up time spent on manual tasks, enabling teams to spend more time improving their workflows and fixing defects. Each feature helps improve the reliability and validity of tests and their results by preventing human error and by generating additional data that can help identify what works as expected and what doesn’t.

Model-based test case generation

Creating a suite of test cases for a project is time-consuming, but absolutely necessary for ensuring proper test coverage. Test case development often involves manually transforming requirements into steps or models, which makes test cases prone to human errors such as typos and misinterpreted requirements. One solution to this problem is to use model-based test tools, which can also help scale up test coverage.

Model-based testing (MBT) derives suites of test cases from models and test requirements. These models often look like flow charts or decision tables, and include components such as:

- Variables and variable types

- Parameters

- Actions or “decisions”

- Inputs and outputs

Once defined, the model produces a set of `X` test cases. These can be imported into a test automation application and executed, or automatically deployed to an intelligent scheduler.
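In its simplest form, generating test cases from a model amounts to combining parameter values and discarding the combinations that the model’s rules forbid. The Python sketch below does this exhaustively with `itertools.product`; the parameters, values and constraint are hypothetical, and real MBT tools typically offer richer models and smarter coverage strategies (such as pairwise selection) to keep `X` manageable.

```python
from itertools import product

# Hypothetical model: parameters and the values each can take.
MODEL = {
    "device": ["android", "ios"],
    "network": ["3G", "4G"],
    "call_type": ["voice", "video"],
}


def allowed(case: dict) -> bool:
    """Hypothetical rule from the requirements: video calls only on 4G."""
    return not (case["call_type"] == "video" and case["network"] != "4G")


def generate_test_cases(model: dict) -> list[dict]:
    """Expand the model into every allowed combination of parameter values."""
    names = list(model)
    cases = (dict(zip(names, values)) for values in product(*model.values()))
    return [case for case in cases if allowed(case)]


test_cases = generate_test_cases(MODEL)
print(f"X = {len(test_cases)} test cases")
for case in test_cases:
    print(case)
```

Of the 8 raw combinations, the two video calls over 3G are excluded, leaving `X = 6`. Adding a third device to the model raises `X` to 9 on the next run without editing a single test case by hand.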

A major benefit of MBT is that models can be tweaked or updated and the changes automatically propagate throughout the entire test project, reducing the maintenance work and corrections needed. At the same time, some MBT tools require advanced programming skills or familiarity with fields of mathematics such as combinatorics. Therefore, when considering whether or not to use MBT, it is useful to ask questions such as:

- What type of programming and mathematical knowledge, if any, is required?

- How does the output from the model look?

- Does the MBT tool integrate with testing frameworks, and if so, which ones?

- How do you convert requirements into test cases with MBT?

Automated test case creation can indeed be very useful, especially when large sets of test cases need to be adjusted or adapted to other projects, but it might not be the optimal choice for a test team’s first project. For example, writing test cases manually is highly recommended for getting familiar with newly adopted test software, as well as for exploratory testing.

Reporting

As mentioned in the previous question, test output comes in different forms, such as logs and reports, and varies from one automation tool to the next. Some tools include automated reporting as part of the software or as an extension. Others provide only limited data about the results, such as decentralized logs that users must parse themselves.

Automating the collection of test results and reporting can eliminate manual activities such as updating spreadsheets and collecting test results from different users or machines. Reporting services may include the following features:

- Charts and graphs

- Approval workflows

- Automated defect and issue tracking

- Error categorization

- Integration with ticketing systems and external databases

By using such reporting services, testers and project managers can save several hours per week of administrative work. In addition, automated reporting prevents data entry errors and streamlines how test results are collected and interpreted.

When looking for test tools that include automated reporting, it’s helpful to ask:

- Who can access the reporting service?

- Does it require programming knowledge or support from IT/Infrastructure?

- Can data be synchronized with other tools such as HP ALM or JIRA?

- Where is the data hosted?

- What types of categorization are available?

Customers that already have robust data analysis tools and workflows may prefer to use their own tooling. However, test automation software that comes with its own reporting and analytics tools offers a more convenient approach to issues such as approval workflows, bug and issue tracking, and parsing categories and values correctly for further analysis. Additionally, when test data must be shared between multiple parties, such as a test team and its customer, who use different project management tools, reporting services that automatically synchronize with external tools such as JIRA or HP ALM can save a lot of time and resources that would otherwise be spent manually keeping the data correct and consistent in every system.

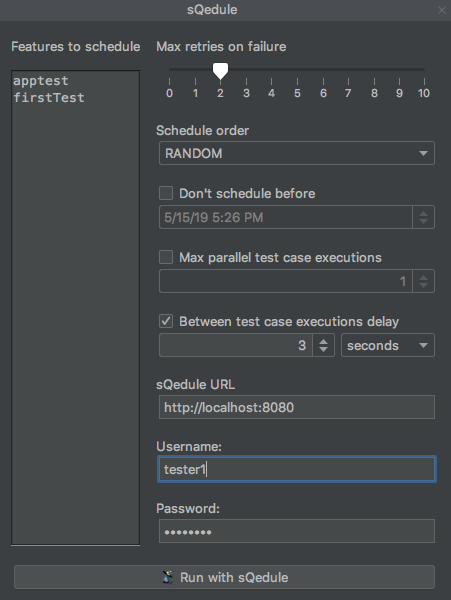

Scheduling tests

In addition to test case creation and reporting, automated test scheduling helps eliminate inefficient use of time by assigning test cases to Agents or Bots that execute them. Automated or “intelligent” scheduling is especially useful for tests that are very long or must be run outside of working hours. It is also a highly productive approach to running regression tests or tests that must be re-run multiple times. Allocating certain types of test cases or projects to an intelligent scheduler can help organizations reduce the number of test automation licenses they need, because intelligent scheduling can execute test cases at a much larger scale than human testers. Therefore, combining test engineers for complex test cases that require human supervision with Agents for other tests is a great way to achieve a high return on investment in test automation.
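A time constraint such as “run only outside working hours” reduces to a small check that a scheduler can apply before starting a test. The Python sketch below assumes, hypothetically, that working hours are 08:00 to 18:00, Monday to Friday; a real scheduler will have its own configuration for this.

```python
from datetime import datetime, time

# Hypothetical working hours; adjust to your organization.
WORK_START = time(8, 0)
WORK_END = time(18, 0)


def outside_working_hours(moment: datetime) -> bool:
    """True on weekends, or on weekdays before WORK_START or from WORK_END on."""
    if moment.weekday() >= 5:  # Monday is 0, so 5 and 6 are Saturday and Sunday
        return True
    return not (WORK_START <= moment.time() < WORK_END)


print(outside_working_hours(datetime(2024, 3, 9, 14, 0)))    # a Saturday afternoon
print(outside_working_hours(datetime(2024, 3, 11, 10, 30)))  # a Monday morning
print(outside_working_hours(datetime(2024, 3, 11, 22, 0)))   # a Monday night
```

A scheduler would call such a check before dispatching each long-running or overnight test to an Agent, holding the test back until the window opens.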

Features that automated scheduling tools such as QiTASC’s sQedule may provide include:

- Different types of test ordering, such as random or sequential

- Parallel execution by multiple Agents

- Automated retries for failed test cases

- Delays between test cases, in case of “cool down periods”

- Resource-aware scheduling, which prioritizes test cases for which all necessary devices are available

On top of improving productivity, automated scheduling can help ensure better test data through configurations that only allow certain tests to run, for example those whose required resources (such as phones and internet connections) are all available. Likewise, schedulers can execute only tests that are tagged as suitable for execution, while excluding unsuitable cases such as tests with known defects.
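Resource-aware selection, tag-based exclusion and automated retries can be combined in a simple loop. The Python sketch below is not how sQedule works; it only illustrates these ideas under hypothetical assumptions: each test case declares the resources it needs and its tags, a `known-defect` tag excludes a test, and `fake_run` stands in for real execution.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class TestCase:
    name: str
    resources: set[str]
    tags: set[str] = field(default_factory=set)


def schedule(cases, available: set[str],
             run_test: Callable[[TestCase], bool], max_retries: int = 1) -> dict:
    """Run eligible cases one after another; return {name: outcome}."""
    outcomes = {}
    for case in cases:
        if "known-defect" in case.tags:
            outcomes[case.name] = "excluded"  # not suitable for execution
            continue
        missing = case.resources - available
        if missing:  # resource-aware: only run when every resource is free
            outcomes[case.name] = "skipped: missing " + ", ".join(sorted(missing))
            continue
        for _ in range(1 + max_retries):  # first attempt plus retries
            if run_test(case):
                outcomes[case.name] = "passed"
                break
        else:  # no attempt passed
            outcomes[case.name] = "failed"
    return outcomes


attempts: dict[str, int] = {}


def fake_run(case: TestCase) -> bool:
    """Hypothetical runner: 'flaky_call' fails on its first attempt only."""
    attempts[case.name] = attempts.get(case.name, 0) + 1
    return not (case.name == "flaky_call" and attempts[case.name] == 1)


cases = [
    TestCase("flaky_call", {"phone_a", "phone_b"}),
    TestCase("roaming_sms", {"phone_a", "sim_roaming"}),
    TestCase("call_hold", {"phone_a"}, {"known-defect"}),
    TestCase("web_login", {"internet"}),
]

outcomes = schedule(cases, {"phone_a", "phone_b", "internet"}, fake_run)
print(outcomes)
```

Here `flaky_call` passes on its retry, `roaming_sms` is skipped because the roaming SIM is not available, and `call_hold` never runs because of its tag.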

When looking for test tools, the following questions will help you understand to what degree they can automate test scheduling:

- Does it support time constraints, such as only running a test case on weekends or at certain times of day?

- Can it be used remotely?

- Is it available as a user interface and/or via the command line?

- What devices and configuration switches does it recognize?

- How does it handle failed test cases?

- What is a good combination of test engineers vs. scheduling Agents to use in my project?

Conclusion

In this article, we covered three questions that you should always ask and deliberate upon when choosing a test automation solution: which language layers a tool offers, how its test results can be interpreted, and what it actually automates. All three should be considered in the context of who will be using the tool, what type of technical expertise they possess, and what problems they need test automation to solve.

The level of abstraction and the test languages should match the users and their level of expertise. As we described earlier, high-level languages such as those in Feature Files are easy for beginners to master, while complex, low-level functionality should be implemented and maintained by those with technical or programming experience. When it comes to test output, logs provide useful troubleshooting and debugging information. However, they are cumbersome to interpret, especially for those with limited IT or coding skills. On the other hand, reporting services that display general overviews of pass/fail rates, errors and issue tracking are valuable sources of information about general patterns and problems in a test project.

Finally, we looked at what can actually be automated within the scope of testing: test case creation, reporting and scheduling. Realizing the benefits of test automation can take time initially, but by going beyond automated test case execution and including other aspects of a project’s workflow, organizations can quickly see the value of investing in such software. Tools such as QiTASC’s intaQt®, conQlude and sQedule reduce activities that are prone to human error, such as data entry for reporting and haphazard selection of test cases for scheduling, and support models and templates of test suites that can be propagated automatically across projects. In doing so, QiTASC promotes increasing levels of automation that help our customers with diverse teams achieve their goals and get their products to market faster and with higher quality than ever before.