3 Ways to interpret test results

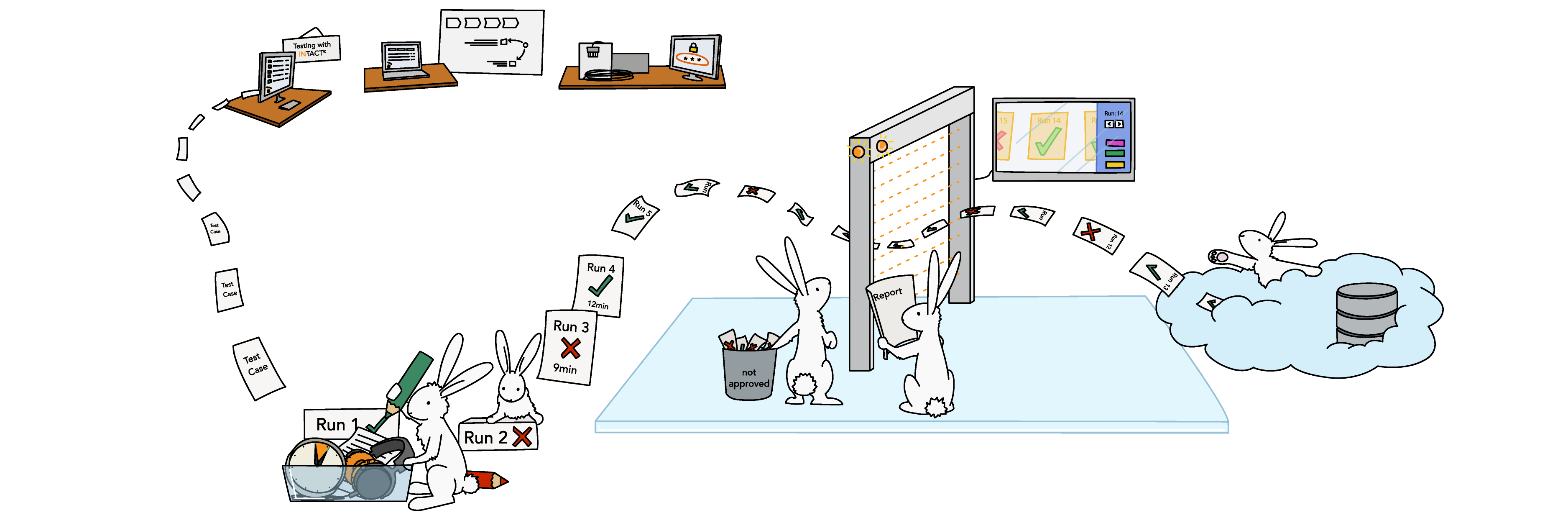

Aside from a simple pass/fail result, detailed test results take many forms, including logs, reports, and even extracts from other systems that were affected by the test. These can be interpreted in many ways, and test results and selected details may also be synchronized with project management or quality control systems.

Test results and data are often difficult to interpret and troubleshoot, and sometimes even difficult to find. Output can also vary depending on the language layer or level of abstraction. For example, server logs display detailed, low-level information that is helpful for debugging but cumbersome to read through, especially for users with limited technical skills. On the other hand, reporting interfaces that show high-level information such as executed steps, their outcomes and error messages are much more accessible to all user types. In addition, many teams synchronize their test output with external tools such as project management databases.

Option 1

Log output

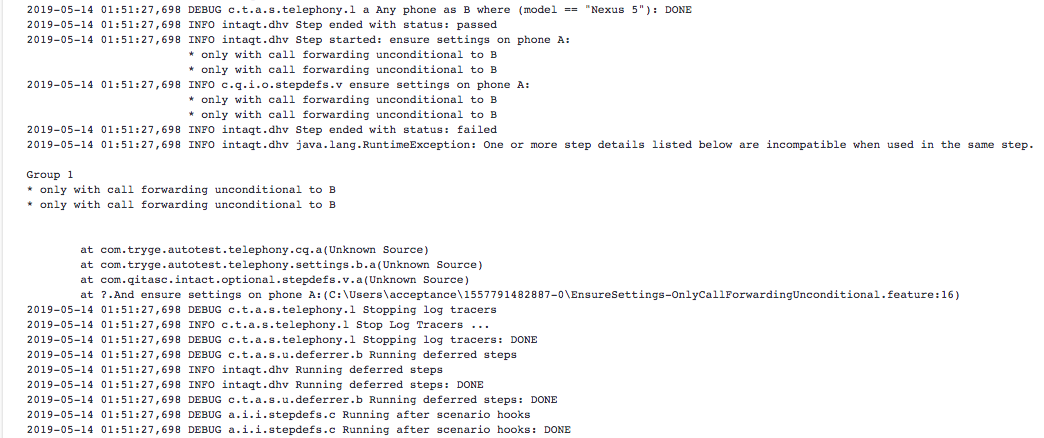

Log output, as in the accompanying example, does contain an error message about incompatible step details when ensuring call forwarding. However, it might not be obvious how to read through the log or what to look for. This is because such server logs include all log messages, including protocol logs and debug information, in addition to the test’s steps and their outcomes.

Often developers or more advanced testers consult logs to find clues to failures or to check if any obscure issues are present in a test case and the system under test. While server logs aren’t exactly user-friendly to everyone, it’s likely that during the course of a test project, a senior tester or IT member will want to examine the logs.

Therefore, it’s important to know:

- What types of logs are generated by the test automation software

- If logs are available in a centralized location or only on the user’s machine

- Who can access the logs

- If log data is easily parseable
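To illustrate what "easily parseable" can mean in practice, here is a minimal Python sketch that pulls only warnings and errors out of a noisy log. The line layout (`<date> <time> <LEVEL> <message>`) and the sample lines are assumptions made up for this example; they are not the format of any particular test automation tool, so the pattern would need to be adapted to the logs your tool actually produces.

```python
import re

# Hypothetical log line layout: "<date> <time> <LEVEL> <message>".
# Real tools use their own formats; adjust the pattern accordingly.
LOG_LINE = re.compile(
    r"^(?P<timestamp>\S+ \S+)\s+(?P<level>[A-Z]+)\s+(?P<message>.*)$"
)


def extract_problems(lines, levels=("ERROR", "WARN")):
    """Return (timestamp, level, message) tuples for lines at the given levels."""
    problems = []
    for line in lines:
        match = LOG_LINE.match(line.strip())
        if match and match.group("level") in levels:
            problems.append(
                (
                    match.group("timestamp"),
                    match.group("level"),
                    match.group("message"),
                )
            )
    return problems


# Invented sample lines, for illustration only.
sample_log = [
    "2024-01-15 10:02:11 DEBUG Opening protocol session",
    "2024-01-15 10:02:12 INFO Step started: ensure call forwarding",
    "2024-01-15 10:02:13 ERROR Incompatible step details",
    "2024-01-15 10:02:14 DEBUG Closing protocol session",
]

for timestamp, level, message in extract_problems(sample_log):
    print(timestamp, level, message)
```

If a log cannot be split into fields this reliably, that is a sign that reading it will take more manual effort from whoever troubleshoots failed tests.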

Option 2

Reporting and summaries

While access to logs is critical for troubleshooting and debugging, a quick overview of the results of individual test cases and of the project as a whole is just as valuable. It abstracts away the details and gives an immediate understanding of pass/fail rates, common errors and open bugs.

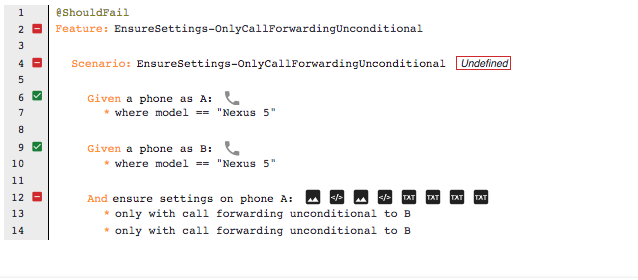

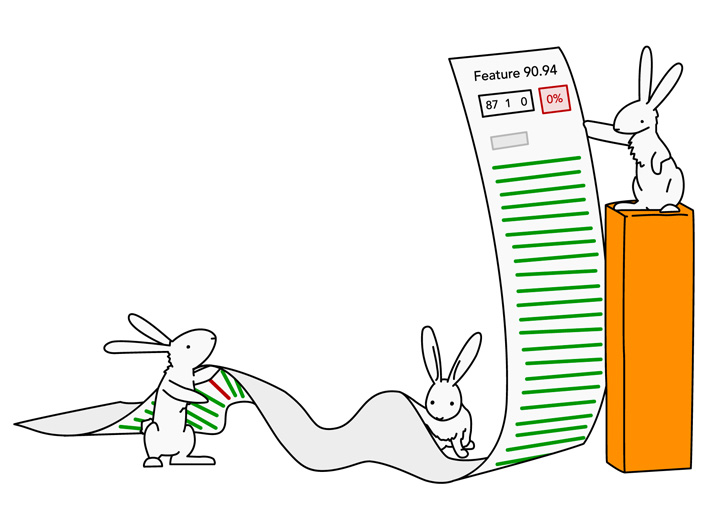

Because they are user-friendly and abstract away low-level detail, reporting services are extremely useful for communicating results to non-technical audiences who are interested in a project’s outcome. For example, QiTASC’s conQlude reporting service would depict the same log output shown in the log example as a report like the one pictured here.

This report draws the user’s attention to the error category and to the step on which the test case failed. It does this by showing the Feature File, which is written in a high-level test language, and highlighting the important information.

Having the option to further define and group error categories is useful for several reasons:

- Error categories give a general overview of common errors

- Categories that reflect user errors provide insight about training or re-training needs

- Error categories can be further grouped by additional criteria such as severity level, device types or test phase
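The grouping described above can be sketched in a few lines of Python. The record fields (`test`, `status`, `category`, `severity`) and the sample values are hypothetical and chosen only for illustration; a real reporting service defines its own data model and does this work for you.

```python
from collections import Counter

# Invented results for illustration; field names and values are assumptions.
results = [
    {"test": "call_forwarding_01", "status": "failed",
     "category": "Incompatible step details", "severity": "high"},
    {"test": "call_forwarding_02", "status": "failed",
     "category": "Incompatible step details", "severity": "high"},
    {"test": "voicemail_01", "status": "failed",
     "category": "Timeout", "severity": "low"},
    {"test": "voicemail_02", "status": "passed",
     "category": None, "severity": None},
]


def pass_rate(results):
    """Fraction of results whose status is 'passed' (0.0 for an empty list)."""
    if not results:
        return 0.0
    passed = sum(1 for r in results if r["status"] == "passed")
    return passed / len(results)


def count_failures_by(results, *keys):
    """Count failed results grouped by the given fields, e.g. category and severity."""
    return Counter(
        tuple(r[key] for key in keys)
        for r in results
        if r["status"] == "failed"
    )


print(f"Pass rate: {pass_rate(results):.0%}")
for group, count in count_failures_by(results, "category", "severity").most_common():
    print(group, count)
```

Grouping by a different combination, such as device type or test phase, only requires passing other field names to `count_failures_by`, provided the results carry those fields.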

Option 3

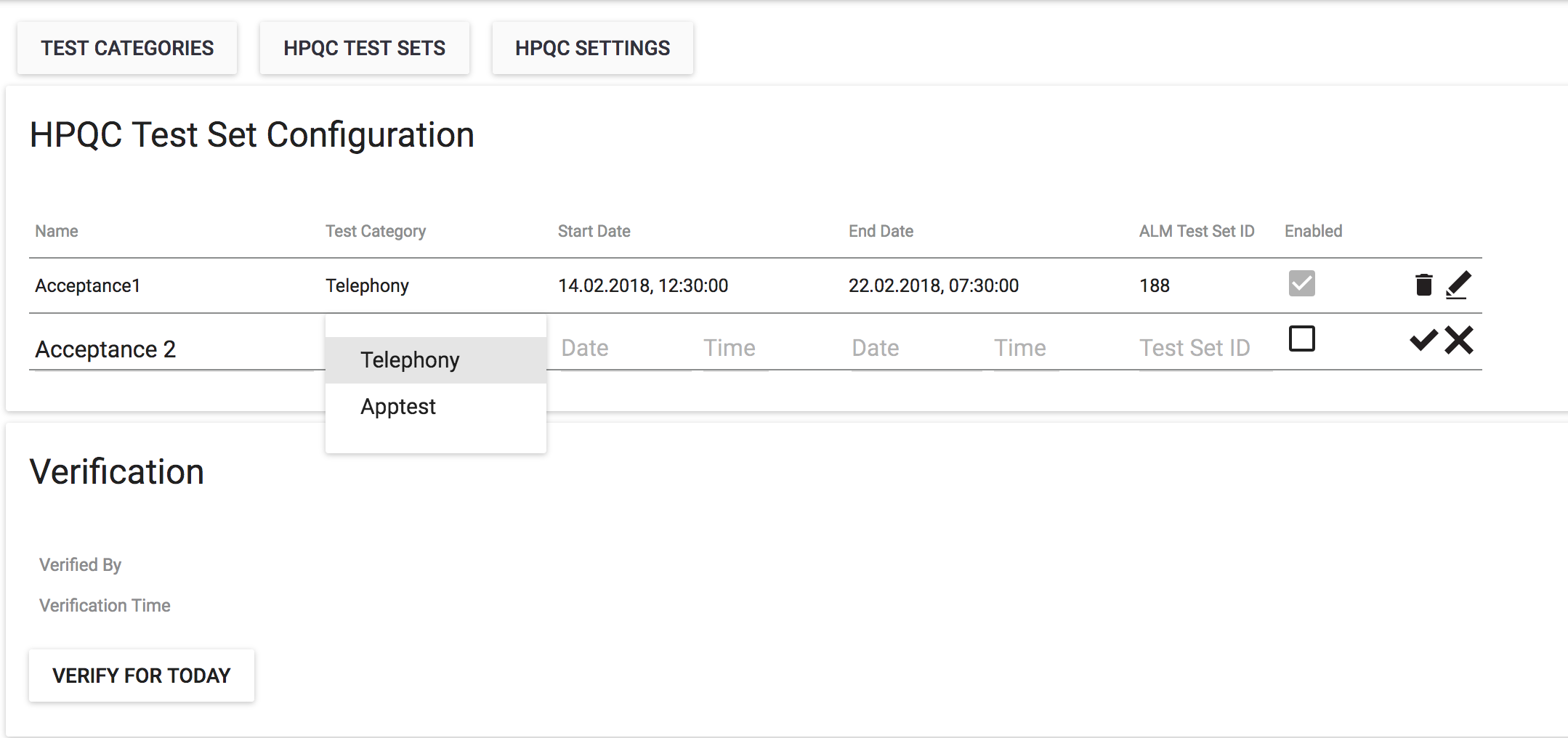

Synchronization with other tools and data export

In addition to accessing test output within a test automation tool, teams often need to share results internally or with external customers. For example, a team may use JIRA internally to track its test executions and defects, while the customer uses HP ALM. Certain reporting tools, such as QiTASC’s conQlude, simplify this because they can automatically synchronize with both systems. Being able to download data as CSV files or in other parseable formats may also be important for data analysts and project managers who wish to track certain metrics or KPIs.
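As a rough illustration of what a parseable export looks like, the following Python sketch writes a list of test results as CSV using only the standard library. The column names and sample rows are assumptions for this example; it does not represent the export format of conQlude, JIRA or HP ALM.

```python
import csv
import io


def results_to_csv(results, fieldnames=("test", "status", "error_category")):
    """Serialize result dictionaries to a CSV string with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=fieldnames,
        extrasaction="ignore",  # silently drop fields that are not exported
        lineterminator="\n",
    )
    writer.writeheader()
    writer.writerows(results)
    return buffer.getvalue()


# Invented sample data, for illustration only.
sample_results = [
    {"test": "call_forwarding_01", "status": "failed",
     "error_category": "Incompatible step details", "duration_s": 12.4},
    {"test": "voicemail_02", "status": "passed",
     "error_category": "", "duration_s": 8.1},
]

print(results_to_csv(sample_results))
```

A file like this can be opened in a spreadsheet or loaded into an analysis tool, which is usually all that analysts and project managers need in order to track their own metrics.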

Is the test output relevant for my team?

Once you’ve taken a look at the types of test output generated by a test automation tool, you can evaluate whether their level of complexity is useful for your team, or whether interpreting them would cause too much additional effort. For example, if you have an in-house data analysis team, log output without a reporting service might be sufficient. For smaller organizations, however, reporting tools provide clear summaries of a project’s progress that can be quickly and easily shared with non-technical stakeholders, including customers and investors.

Test results can be interpreted from many different sources, especially from logs and reports. While logs contain a lot of raw data that can be difficult to read, they’re nevertheless critical sources of information for debugging and finding the root cause of failures. Reporting services, meanwhile, provide an overview of project results that can be quickly understood by interested parties, regardless of technical background. Finally, synchronizing test results with external tools like JIRA or HP ALM provides additional flexibility for further data analysis and lets teams integrate their test results into the larger scope of project management.