Articles, news and events

The latest trends in test automation, our achievements and upcoming events. Subscribe to our newsletter so you don't miss anything.

Company news

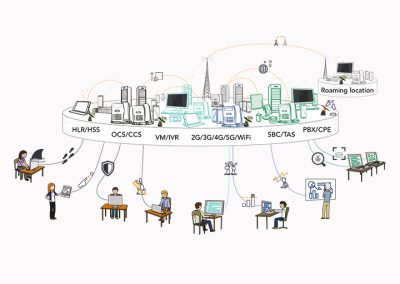

Mobile World Congress 2024

QiTASC attended the Mobile World Congress in Barcelona from February 26th to 29th, 2024, where we presented our latest achievements in test automation, such as the AI service and the Portable Smart Meter Lab Suitcase.